I was reading the other day about the Tube Challenge, where people attempt to visit all 270 stations on the London Underground in the quickest possible time. Apparently successful routes are a closely-guarded secret, which got me thinking about how difficult an optimisation problem it must be to find the best route.

This is the travelling salesman problem, long-studied and apparently essentially solved. The object is to visit a set of points exactly once in the shortest possible distance. For a collection of points, the number of possible routes scales like

, so it quickly becomes impractical to check every possible route.

Instead, a number of approximate algorithms are used to find solutions which are acceptably close to the optimum. These operate vastly more quickly, and can approach the optimum to within a few percent. One such algorithm is simulated annealing, which is designed to mimic the physical annealing process in, e.g., metals.

In a physical system of energy and temperature

, the probability of being in a state

compared to a state

is

where is Boltzmann’s constant and I’ve neglected here a possibly energy-dependent density of states

. At high temperatures, a physical system with a large number of degrees of freedom can happily jump around between energy levels as their relative probabilities tend to 1. The system is free to explore its ‘state space’ without penalty. As the temperature is reduced, the system will begin to preferentially occupy lower-energy states. In the limit that the system is able to explore all possible states, at zero temperature it must occupy the lowest-energy ground state. In metallurgical annealing, a metal is heated so that it may freely reconfigure its internal structure, then cooled into a new and more favourable low-energy state. This process may theoretically occur at room temperature, but is exponentially slower. Annealing then is a way of forcing the metal out of a local minimum and into a new one.

If an optimisation problem can be recast into a form where there is some energy equivalent to be minimised, the simulated annealing algorithm approximates this physical process and attempts to find a ground state.

In the travelling salesman problem the required property to minimise is the total length of the path, i.e. for points at

the quantity

We then need to allow the system – the path – to explore its state space. The path is determined by the order in which the points are visited, and so is just a list of indices.

The simplest perturbation is then to swap any two elements of the list, which changes the shape and length of the path. The crucial step in simulated annealing is deciding whether to accept this change. The probability that we should swap is borrowed from physics:

If the path becomes shorter, then and we make the swap regardless of the temperature. Importantly, there is some probability that even for

we make the swap anyway. This allows the path to get longer some of the time, which helps to avoid getting stuck in local minima. At high temperatures the path will get longer and shorter at roughly the same frequency, but as we lower the temperature the chance of path length increasing drops quickly.

As a concrete example, take 50 points in the unit square and initialise a random path which connects them. To choose the initial temperature, I do 1,000 random swaps to check what the typical ‘energy’ penalty is (technically I find ). I set the temperature

a low multiple of this, which allows the path to initially vary a lot and minimises the importance of the particular path I began with.

I then successively lower the temperature at every iteration step as

, where

is some constant which determines the cooling rate.

First, at high cooling rate (low ) the path quickly settles on a configuration. The temperature is too low to jump out of this minimum so there is no choice but to stick with this path.

When the system cools a little more slowly (higher ), the path is able to vary a little more and consequently is able to find a slightly shorter path.

Clearly, it is important to control the way the system cools if we are to get the best performance from this algorithm. The method used here is the simplest possible, other methods involve the integration of an ODE for the temperature as a function of time and can yield more optimal results.

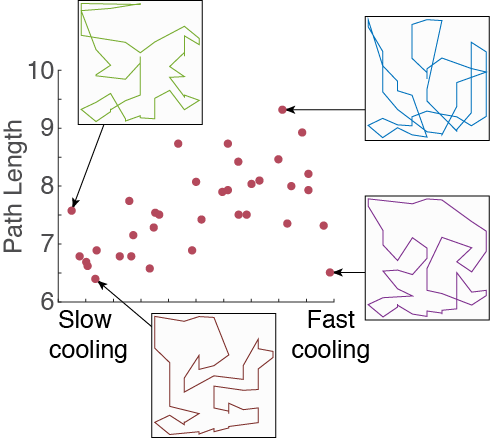

Sticking with the above example, I take a hundred or so random paths and perform 100,000 annealing iterations at different cooling rates, plotting the length of the resultant path as a function of cooling rate.

There is a slight tendency for lower cooling rates to lead to shorter paths, though there is significant spread depending on the original shape of the path. Of course, a slower cooling rate means the algorithm takes longer to run so a tradeoff must be made.

There is a slight tendency for lower cooling rates to lead to shorter paths, though there is significant spread depending on the original shape of the path. Of course, a slower cooling rate means the algorithm takes longer to run so a tradeoff must be made.

Knowing a little more about simulated annealing, I turn my attention towards the tube problem. From an earlier post I have some information about the London underground network. In the spirit of the tube challenge I exclude the DLR and Overground, and enforce the rule that every station should only be visited once. This leads to a collection of 266 stations, which will be substantially slower than the problem considered above of 50 points. After some fiddling, I made some small changes to the algorithm.

Rather than adopting a simple cooling law, I make things a little more complicated by dropping the temperature more quickly if a run of successful swaps are made, hoping that I descend into a deep likely-looking minimum rather than jumping out of it. I also preferentially target portions of the path which are excessively long – 20% of the swaps are made for points which make up the top 10 longest sections of the path. I also adjust the cost function – rather than minimising length of path, I minimise time to traverse the path. Sections which are travelled by tube are assumed to progress at 50kph, while walking sections between stations are assumed to progress at 5pkh. Any decent algorithm should reproduce the common-sense approach: go by tube wherever possible.

As a simple test case, I consider first just the Bakerloo line in isolation. This is a simple line with no branch points, so lets see what the computer thinks. In the following walking parts of the path are dotted blue lines, and tube sections are solid lines coloured by the tube line colour.

Well, you might think it’s common sense to get the tube from A to B rather than haphazardly walk around London, but you can rest assured that your flimsy human intuition is now backed up by solid computational experiment.

That was an easy one, what about the Central line with branches and a loop?

This one takes a little longer to converge, and I had to cheat a bit by manually picking the start and end points of the path – the algorithm would usually get one or the other right but rarely both. The final path looks largely sensible, small walking sections where absolutely necessary, with tube journeys making up the majority of the path.

With high hopes I loaded in the full complement of stations and left my laptop to chunder through a few million annealing steps overnight. There is convergence after 2 million steps or so, but the final path isn’t exactly perfect.

Clearly we are expected to jog most of the way around London, taking the odd 5-minute tube journey to relax. The total time is a decidedly less-than-optimal 55 hours, the vast majority of which is spent walking long distances (Heathrow to Ruislip? Really?). However there is some promise there, the decision to take the District line south and walk to Morden is eminently sensible, and one used in the suggested route.

It is possible that slower cooling will lead to a better path, or perhaps more clever control of the cooling rate. It would also be simple to rewrite in C++ or similar, which will run orders of magnitude more quickly than Matlab.

If anyone has any suggestions let me know in the comments.

My first guess for a way to improve would be to do each line individually, then run a round where the only modification it can make is interrupting a train trip between two stations (or at a start/finish) for a walk (and only if a route connects back to where it left off), giving a good initial state for the second round where more drastic changes in route can be made. I’ve never played with this algorithm so I’m not sure how useful that actually is.

LikeLike

This is the first time I’ve played with the algorithm too, so I’m sure there is much to be done in terms of implementing it correctly! You have a point though, perhaps work out where it is possible to jump from line-to-line in walking distances below some given length, then brute-force your way through the now much lower number of permutations.

LikeLike

Hello Jason,

Thanks for yet another excellent blog post.

It would be awesome if you could share with us the positions of the stations and the assumed distances between them (unless your assuming straight lines), and let’s see if anyone can beat your time!

Best,

Nikos

LikeLike

Hi Nikos, thanks, glad you like the post! I got the tube data from here:

http://commons.wikimedia.org/wiki/London_Underground_geographic_maps/CSV#Routes

The positions are in latitude and longitude, so there is a slight complication in working out kilometre distances between stations, but nothing too complicated.

LikeLike

Lovely presentation of the simulated annealing, particularly the quick animations of the 50-point problem. I wonder if the challenge in your final tube solution is the limitation that you can only visit each station once? You can obviously walk from any station to any other, but can only catch the tube to a limited few and potentially just one at the end of the line.

The alternative premise is to set this up as the garbage truck problem, which requires you to visit every location but does not restrict you from driving back along ‘clean’ streets at high speed to connect to the next loop.

LikeLiked by 1 person

Hi Jason, thanks for the comment. I think you’re right, in the real challenge you’re allowed to revisit stations as long as you visit all of them. The main reason I chose the only-visit-once rule is that it was easy to program – just permute a fixed-length list of stations. With the garbage-truck problem you’d have to allow the path to increase the number of stations it visits too. I imagine this would increase the average energy penalty of a path ‘perturbation’, so the initial temperature should be higher – the path length might have to increase a lot before it can shrink into a minimum. Interesting point though, and probably something I’ll come back to in the future.

LikeLike

The restriction not to visit a station twice is overly restrictive. You can keep it for computational efficiency’s sake, but only if you first transform your adjacency matrix by running Floyd-Warshall on it.

LikeLike

Hi Arthur, I know, I kept that rule for simplicity of implementation! As an ignorant physicist I’ve never heard of the Floyd-Warshall algorithm, but I’ll be sure to take a look, cheers.

LikeLike

Concorde (http://www.math.uwaterloo.ca/tsp/concorde.html) is, as far as I know, currently the most efficient solver for TSP instances. Instead of trying to invent your own solutions for which you can’t even prove any properties (even for the rather simple Christofides algorithm (http://en.wikipedia.org/wiki/Christofides_algorithm) for solving instances of the metric TSP, you can prove that it will find a solution that has value of at most 3/2 of the optimal solution), use the hard work that mathematicians did on the TSP for decades.

If you really like to write your own solution, I would highly recommend to start with the subtour elimination formulation of the TSP problem (http://people.mpi-inf.mpg.de/~rbeier/opt2001/held_karp.pdf). Even though it has an exponential number of inequalities, this is no problem, since they can be separated in polynomial time (i. e. given a point you can find a violated inequality in polynomial time). Use this in a cutting plane algorithm. For getting from the potential fractional solution of this LP relaxation to a tour, you can either

* use an exact way (say, branch and bound (http://en.wikipedia.org/wiki/Branch_and_bound) or even better, but more complicated to implement branch and cut (http://en.wikipedia.org/wiki/Branch_and_cut) – if you really want to go this second way, best use split cuts (or equivalent, but easier to implement: Mixed Integer Rounding (MIR) cuts))

* use randomized rounding (if some variable has value 0 < x_i < 1) set it to 1 with probablility x_i and 1 with probability 1-x_i

* Use additional classes of cutting planes for the TSP (facet-defining ones, say comb inequalites, are of cause the best ones that you can get).

TLDR: I really can't understand why computer scientists and engineers like Simulated Annealing so much. There are much better ways to solve combinatorial optimization problems. Very often methods from polyhedral combinatorics yield pretty good results.

Wolfgang Keller

Graduate student in mathematical optimization

LikeLike

Hi Wolfgang, thanks for the thorough and authoritative comment. As you’ve probably guessed, I have very little knowledge of optimisation algorithms, I picked simulated annealing here as it was visually appealing and some analogy to physics, which I do know about! (Graduate student in experimental physics here, quite far from mathematical optimisation methods) I’ll take a look at Concorde thanks, it seems I can upload my problem to test out there.

Cheers, Jason

LikeLike

Had to make this quick note in the middle of the read: “Annealing then is a way of forcing the metal out of a local minimum and into a new one.” << BEST description of annealing that I have ever seen! Nice writing 🙂

LikeLike

Hi Laura, thanks!

LikeLike